The importance of picking the right loss function

I don't know if Drucker thought a lot about artificial intelligence but he could have been when he said, 'what gets measured, gets managed. AI models learn through a repeated effort to minimise error. How we define error can wildly change how an AI learns and how useful it is in practice. Poor choices can lead to useless models and in the context of general AI, a poor choice might lead us to our doom. As AI influences more of how we spend our time, we must make sure we're measuring and optimising the right things. Today, we're almost certainly victims of bad optimisation. More and more online content is being filtered, sorted and delivered by AI and shows no sign of slowing down.

We build Artificial Intelligence models to take data and produce a useful output. AI learns to do this by processing large volumes of example scenarios. For each scenario, we provide the input data and the desired output. The AI takes the data, transforms it, and outputs something close to its target. The learning loop happens when the AI compares the generated output to the target output. It adapts itself to make fewer mistakes in the future by minimising a loss function. A loss function is a measurement of error generated by comparing the output to the target. We train AI until that error is low enough to be useful. Some systems continue to train in real-time on an endless quest to lower the loss function. As such a critical piece of the learning loop, the choice of loss function is crucial. Wrong choices can lead to unintended side effects.

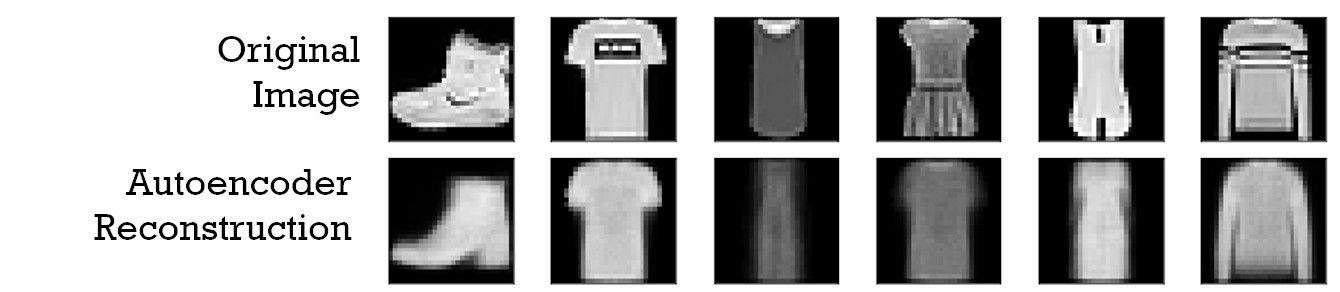

It may be helpful here to talk about a small example. There are a group of AI models known as autoencoders. How they work is not particularly important here. They have many uses, including removing noise from images. Here lets take the most straightforward version, which generates the same image we gave it as an input. The model has two stages, encoding and decoding. Encoding is taking the image and turning it into a compressed internal representation. Decoding takes that compressed representation and turns it back into an image. The goal of the model is for the decoded image to be as close to the original as possible. One way to measure this is to move through, pixel by pixel, and measure the difference in values. Averaging this across the image gives a single number that represents the error. That number is zero if the two are identical and is larger the more they differ. This is the model's loss function. At first glance, this seems like a sensible way to measure the error. In practice, it tends to result in blurry images. The AI finds that the best way to get a low loss function most of the time is to be okay on average. Some pixels are a little above, some are a little below but on average it is okay. Newer autoencoders have more complex loss functions, aiming to better capture our idea of a good match. When AI makes critical decisions, it is vital to pick a loss function that matches our goals.

Most AI in the world today is what we call narrow AI. It solves one particular problem in a tiny domain. General AI would be AI with wide-reaching capabilities in charge of larger systems. When designing General AI, a poor choice of loss function could be catastrophic. The go-to example here is an AI-controlled paperclip factory. In this factory sits an AI with a simple loss function. Make more paperclips. The more that get made, the lower the error but this error has no lower limit. In this scenario, the AI works through all the world's resources to make more paperclips. Eventually turning all matter in the universe into paperclips. Luckily for us, most paperclip factories would be turned off before world domination. However, general and narrow AI may operate on bad loss functions unnoticed in the real world. By the time we realise the error of our choices, it may be too late to intervene.

We're thankfully still a long way off a Skynet type AGI takeover. Search and media companies optimise for engagement to pay bills. They keep users coming back to view ads or keep their subscriptions active. What's becoming increasingly clear to me is that these loss functions aren't giving us what we really want. They're okay on average but lack the details we're really after. We receive a mix of highly polarised content there to get us fired up or content that hooks our minds but is a low drone of meaningless output. Everything we get is alright but even when it's dramatic, it's rarely surprising. This affects creators as well as consumers. So many people work to the whims of the "algorithm" doing what they need to do to fit in the blurry middle that keeps the loss function low. Those paying the bills likely argue that if we didn't like it we'd leave, let's but like cigarettes and lining walls with asbestos, there's a lot of money to be made before we come to our collective senses.