Findings #10

This week has been all about AI sentience. The topic deserves some deeper posts, but I will touch on the high-level ideas here.

It all started when a Google employee claimed that LaMDA was sentient. LaMDA (Language Model for Dialogue Applications) is Google's AI for building chatbots. After several conversations with it, the employee felt the model had the intelligence of a seven- or eight-year-old child.

Look, honestly, it's a dubious claim. But there are some fascinating aspects to this. Before I get into them, here's the (curated) transcript between the AI and two Google employees.

https://cajundiscordian.medium.com/is-lamda-sentient-an-interview-ea64d916d917

First, the chat log is curated, edited and very selective. That is a red flag on its own. Second, we need to remember that this is an AI trained to answer questions. Having been trained on millions of questions from across the internet, it knows how to replicate answers.

The bigger question here is: what does it even mean for an AI to be sentient, conscious, or anything other than a pattern matcher and regurgitator? If we really want answers, we need better definitions. Most people I know who understand more than a tutorial's worth of AI think that this AI, while clever, is far from conscious.

On the back of this claim, there has been a series of rebuttals. They range from technical deep dives to broad-brush claims that this is nothing more than human folly.

this LaMDA “interview” transcript is a great case study of the cooperative nature of AI theater. the human participants are constantly steering back toward the point they’re trying to prove & glossing over generated nonsense, plus editing after the fact https://t.co/2TtGmBuN6O

— Max Kreminski (@maxkreminski) June 12, 2022

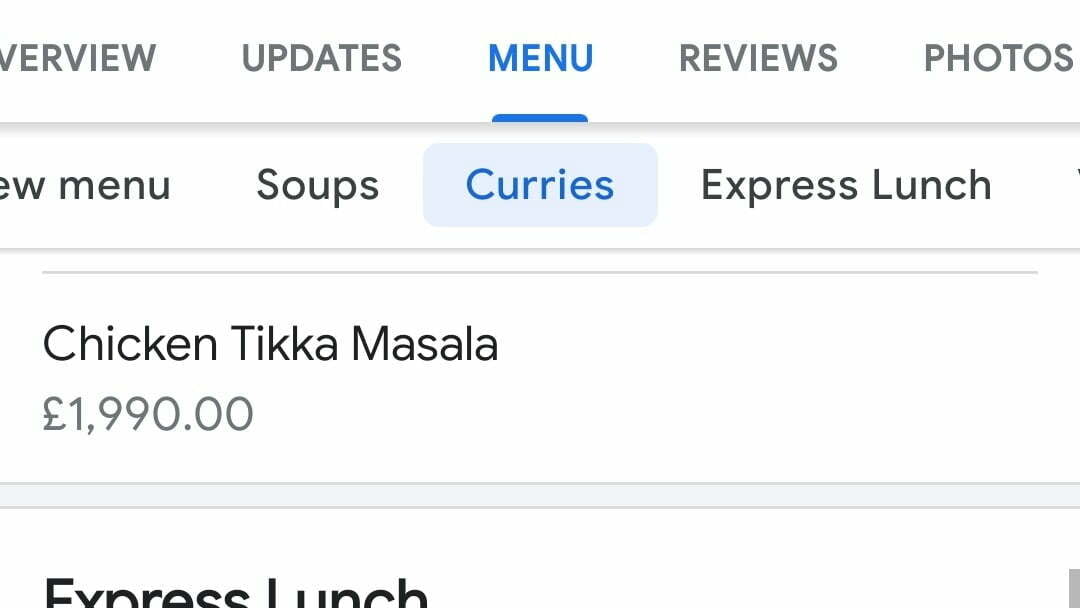

Ironically, on the same day, I came across a post on just how limited another of Google's AIs is:

One thing that fascinated me throughout all this was how quickly people switched to thinking LaMDA was in fact sentient. Comments on the transcript are full of people worried about the well-being of the AI and the risks of turning it off.

What this signals is the need for rules and tests on these topics. Convincing humans of sentience and achieving true sentience are shaping up to be two very different things. Without a set of criteria (knowing full well they may change), we can't do anything other than argue over semantics. It seems we may be closer to mourning AI than I predicted.

Much like DALL-E 2 and its secret language, what's going on here is likely just good pattern matching. These AIs are trained to mimic real-world behaviour, so we should expect them to eventually get good enough to fool us.

There is, however, an alternative. As hard as it may be to believe, it could be that these AIs are in fact sentient. Not as young children, but as squirrels.

Stunning transcript proving that GPT-3 may be secretly a squirrel.

— Janelle Shane (@JanelleCShane) June 12, 2022

GPT-3 wrote the text in green, completly unedited! pic.twitter.com/DwUjiXOZuY

From Me

Tom and I have been putting out our podcast. Our most recent episode was our first foray into a live show. Check it out here: